The Power Behind AI: America’s Trillion-Dollar Bet on HPC Data Centers

As artificial intelligence reshapes the global economy, a fierce competition for computational supremacy is unfolding across America. The nation’s electricity grid may prove the ultimate bottleneck.

In the plains of Louisiana, a data center of unprecedented scale is taking shape. When complete in 2030, Meta’s newest facility will consume over 2 gigawatts of electricity—roughly equivalent to the power needs of 1.5 million homes. This is no ordinary digital warehouse, but a specialized high-performance computing (HPC) center designed to handle the voracious computational demands of artificial intelligence.
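The homes comparison can be sanity-checked with simple arithmetic. The sketch below uses only the two figures quoted above (2 gigawatts and 1.5 million homes) and derives the average household draw they imply. The function name is illustrative, and the calculation assumes the facility runs at its full rated load all year, which is a simplification.

```python
# Sanity check of the "2 GW is about 1.5 million homes" comparison.
# Assumption: the facility draws its full rated power continuously.
HOURS_PER_YEAR = 8760

def implied_home_usage(facility_watts, homes):
    """Return (average watts per home, kWh per home per year)."""
    avg_watts = facility_watts / homes
    kwh_per_year = avg_watts * HOURS_PER_YEAR / 1000
    return avg_watts, kwh_per_year

avg_w, kwh = implied_home_usage(2e9, 1.5e6)
print(f"{avg_w:.0f} W average per home, {kwh:.0f} kWh per year")
```

The implied figure, a little under 12,000 kWh per household per year, is in the general range of typical U.S. residential consumption, so the comparison holds up as an order-of-magnitude claim.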

Meta is hardly alone in this building spree. Across America, tech giants are constructing computational fortresses on a scale that would have seemed fanciful just a decade ago. Microsoft plans to spend $80 billion in 2025 alone, largely on scaling AI infrastructure. Amazon’s projected capital expenditure may exceed $100 billion. The collective spending on digital infrastructure by America’s tech titans is approaching $320 billion annually—roughly equivalent to Finland’s entire GDP.

The New Industrial Revolution

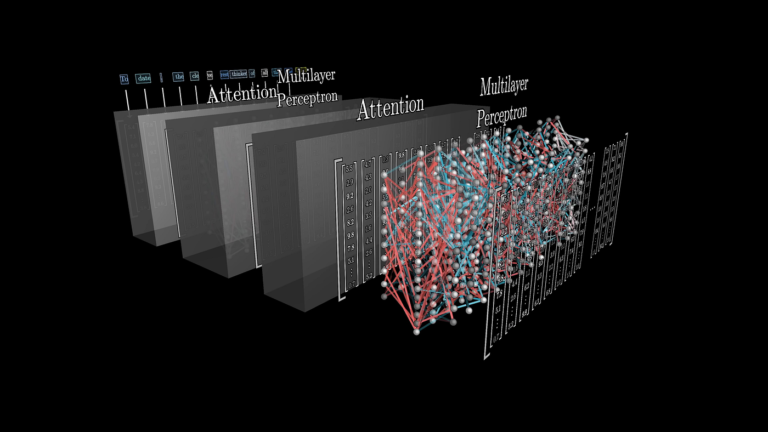

America’s HPC landscape already dwarfs that of any other nation. Of its 5,300 data centers, only several hundred are dedicated to the intense computational tasks of scientific simulations and AI model training and inference. These facilities house specialized accelerators—primarily graphics processing units (GPUs) and AI-specific chips (AI ASICs)—that form the backbone of modern computing power.

NVIDIA’s GPUs dominate this technological arms race, accounting for 70-80% of the AI accelerator market and more than 98% of all advanced GPUs. Its high-end H100 data center GPUs power everything from climate models to large language models, though competitors like AMD are gaining ground in government supercomputers. Meanwhile, Google deploys millions of its own custom AI chips, known as Tensor Processing Units (TPUs); Amazon deploys its Trainium and Inferentia chips; and Meta is likewise developing its own silicon.

These computational fortresses are insatiable energy consumers. Frontier, one of the world’s leading supercomputers at Oak Ridge National Laboratory, draws 21 megawatts of power. Modern GPU-accelerated racks can draw 50-100 kilowatts each—an order of magnitude beyond traditional data center designs.

This concentration of computing power generates immense heat, necessitating advanced cooling solutions. Air cooling proves insufficient beyond certain thresholds, driving a shift toward liquid-based alternatives—where coolant is piped directly to cold plates on processors or entire servers are submerged in dielectric fluid.

The Major Players

A handful of organizations dominate America’s HPC landscape, each with distinct strategies:

- Meta (Facebook) is rapidly converting its infrastructure for AI workloads, investing $60-65 billion in 2024-25 primarily on data centers and servers. It aims to deploy 1.3 million GPUs by the end of 2025 and is building giant hyperscale campuses, including the 2+ gigawatt Louisiana facility.

- Microsoft, through its Azure cloud and partnership with OpenAI, plans to spend $80 billion in 2025 to build AI data centers—over half in the U.S. It deploys thousands of NVIDIA GPUs for OpenAI while also developing its own AI chips (Project Athena).

- Amazon Web Services, the largest cloud provider, uses NVIDIA GPUs alongside its custom silicon: AWS Trainium chips for training AI models and Inferentia for inference. Its 2025 capital expenditure is projected around $100 billion.

- Google operates some of the most advanced HPC data centers for both internal needs and cloud customers. It runs NVIDIA GPUs for external services while heavily using its TPU chips internally. By one analysis, Google’s aggressive deployment of custom TPUs made it the third-largest data center chip supplier in 2023.

- CoreWeave, a specialized cloud provider focused entirely on GPU computing, has quickly expanded to operate 15 data centers in the U.S. and secured large investments to fuel its buildout.

- Government and National Labs host some of the most advanced HPC data centers for scientific computing. Oak Ridge National Lab operates Frontier, the world’s first exascale supercomputer, while Lawrence Livermore National Lab recently deployed El Capitan, which delivers 1.74 exaflops on the Linpack benchmark.

Major Deployments

- Frontier (Oak Ridge National Lab): The world’s first exascale supercomputer with over 37,000 AMD Instinct MI250X GPUs delivering 1.1 exaflops.

- Aurora (Argonne National Lab): Features 63,744 Intel “Ponte Vecchio” GPUs achieving ~1.0 exaflops Linpack and 10.6 exaflops on AI benchmarks.

- El Capitan (Lawrence Livermore): Uses four AMD Instinct MI300A APUs per node and delivers 1.74 exaflops on Linpack (2.79 exaflops peak).

- Meta AI SuperClusters: Two new supercomputing clusters, each with 24,576 NVIDIA H100 GPUs, built to train next-generation large language models.

- Cloud AI Supercomputers: Major cloud providers host large GPU/TPU clusters as part of their services, with Microsoft building a flagship AI supercomputer for OpenAI with over 10,000 GPUs.

The Road Ahead: Three Scenarios

Forecasting America’s HPC footprint through 2030 involves considerable uncertainty. Three scenarios emerge:

Expected Scenario (Base Case)

By 2030, America might host approximately 1,000 HPC-capable data centers (up from a few hundred today). These facilities would house roughly 10 million GPUs and AI accelerators—a fivefold increase from 2025. Collectively, they might consume 50-60 gigawatts of power—approximately the peak electricity demand of California.

Realistic Scenario (1.5× Growth)

Under moderately accelerated growth, America could develop around 1,500 HPC-grade data centers by 2030. These would deploy approximately 15 million accelerators and consume 75-80 gigawatts of electricity—a 5-6× increase from 2023 levels.

Optimistic Scenario (2× Growth)

In a scenario of explosive growth, America might approach 2,000 HPC data centers by 2030. This expansion would accommodate roughly 20 million GPUs and AI accelerators, consuming approximately 100 gigawatts of power—equivalent to the electrical capacity of some small nations.
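The three scenarios are essentially the base case multiplied by 1×, 1.5×, and 2×. A minimal sketch of that scaling follows, using only the base-case figures quoted above; the dictionary layout and function names are illustrative assumptions, and the per-accelerator figure is a crude facility-level average rather than a chip specification.

```python
# Base-case figures come from the text; multipliers come from the scenario names.
BASE = {"sites": 1_000, "accelerators": 10_000_000, "power_gw": (50, 60)}
MULTIPLIERS = {"expected": 1.0, "realistic": 1.5, "optimistic": 2.0}

def scale_scenario(multiplier):
    """Scale every base-case figure linearly by the given multiplier."""
    low, high = BASE["power_gw"]
    return {
        "sites": round(BASE["sites"] * multiplier),
        "accelerators": round(BASE["accelerators"] * multiplier),
        "power_gw": (low * multiplier, high * multiplier),
    }

def kw_per_accelerator(power_gw, accelerators):
    """Facility power per accelerator in kW (includes cooling, CPUs, networking)."""
    return power_gw * 1e6 / accelerators

for name, m in MULTIPLIERS.items():
    s = scale_scenario(m)
    low, high = s["power_gw"]
    print(f"{name}: {s['sites']} sites, {s['accelerators']:,} accelerators, "
          f"{low:.0f}-{high:.0f} GW")

print(kw_per_accelerator(50, 10_000_000), "kW per accelerator at the low end")
```

Straight-line scaling gives 75-90 gigawatts and 100-120 gigawatts for the two faster scenarios, so the stated figures of 75-80 and roughly 100 gigawatts sit at the low end of that range, at about 5 kilowatts of facility power per accelerator.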

The Constraints

Several factors may temper this growth. Power availability represents the most significant constraint. In Northern Virginia, America’s largest data center hub, utility Dominion Energy has warned that parts of Loudoun County might face delays for new data center connections due to demand growth. Some local governments have begun limiting or scrutinizing new data center projects over power and environmental concerns.

Cooling technology presents another challenge. Traditional cooling methods use substantial water—hundreds of thousands of gallons daily per facility—which faces increasing scrutiny in water-scarce regions. Advanced cooling technologies like immersion cooling may become essential for extremely high-density deployments.

Cost Analysis

While advanced cooling technologies require higher initial investment, their operating efficiency leads to a better total cost of ownership (TCO) over time. The index shows that although air cooling has the lowest upfront cost, its higher energy usage and density limitations lead to higher cumulative costs by year 3. Immersion cooling’s premium is justified for high-density deployments above 100 kW per rack, while direct liquid cooling offers the best balance for many deployments.

Key factors in TCO: initial capital, energy costs, maintenance requirements, density capabilities, and hardware longevity due to thermal management.
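The crossover logic behind the cost comparison can be sketched in a few lines. Every number below is a hypothetical index value (air-cooling capital cost set to 100) chosen only so that the pattern described above appears; none of it is data from this analysis, and the model ignores maintenance, density, and hardware longevity.

```python
# HYPOTHETICAL index values (air-cooling capex = 100), for illustration only.
COOLING_OPTIONS = {
    "air": {"capex": 100, "annual_opex": 40},
    "direct_liquid": {"capex": 120, "annual_opex": 30},
    "immersion": {"capex": 150, "annual_opex": 28},
}

def cumulative_cost(option, years):
    """Upfront cost plus operating cost accumulated over the given years."""
    c = COOLING_OPTIONS[option]
    return c["capex"] + c["annual_opex"] * years

def first_year_cheaper_than_air(option, horizon=10):
    """First year in which the option's cumulative cost drops below air cooling."""
    for year in range(1, horizon + 1):
        if cumulative_cost(option, year) < cumulative_cost("air", year):
            return year
    return None

for year in range(0, 6):
    row = ", ".join(f"{name}={cumulative_cost(name, year)}" for name in COOLING_OPTIONS)
    print(f"year {year}: {row}")

print("direct liquid beats air in year", first_year_cheaper_than_air("direct_liquid"))
print("immersion beats air in year", first_year_cheaper_than_air("immersion"))
```

With these assumed inputs, direct liquid cooling overtakes air in year 3 while immersion takes until year 5, which is one way a higher upfront premium can still pay off, though only later and only where the operating savings are large enough.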

The supply of cutting-edge semiconductors could become a chokepoint. In 2021-2023, chip shortages affected multiple industries, with packaging technology for high-bandwidth GPU memory (HBM) emerging as a specific bottleneck for AI hardware. The industry will need significant expansion of production capacity for advanced GPUs and ASICs to meet projected demand.

Supply Chain Analysis

The semiconductor supply chain represents the greatest vulnerability, with critical dependencies on TSMC and Samsung for advanced logic chips. Power infrastructure is the second most critical constraint, particularly in high-density regions like Northern Virginia where grid capacity is approaching limits.

Human capital limitations may also slow growth. There’s a documented shortage of skilled data center staff—from construction engineers to facility operators. The largest contractors are adapting by standardizing designs and prefabricating modules off-site, but personnel constraints remain.

The Construction Process

Developing an HPC data center typically takes 18-36 months from planning to commissioning. Site selection weighs factors like proximity to power and fiber networks, geological stability, climate, and local incentives. Permitting can include environmental impact assessments, zoning approvals, and coordination with utilities.

Modern HPC centers favor modular designs—composed of identical halls or pods, each supporting perhaps 10 megawatts of IT load. This approach simplifies construction and future expansion. Specialized contractors including Holder Construction, Turner Construction, DPR, HITT, and others bring expertise in fast-tracking schedules and coordinating the various trades involved.
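To give a sense of what a modular pod means in practice, the rough sizing below combines the 10-megawatt pod figure with the 50-100 kilowatt rack densities and the 2-gigawatt campus mentioned earlier in this article. The function names are illustrative, and treating the campus figure as pure IT load is a simplifying assumption, since real facilities also spend power on cooling and electrical losses.

```python
import math

def racks_per_pod(pod_it_load_mw, rack_kw):
    """Whole racks that fit within a pod's IT power budget."""
    return math.floor(pod_it_load_mw * 1000 / rack_kw)

def pods_for_campus(campus_it_load_mw, pod_it_load_mw=10):
    """Pods needed to reach a campus-level IT load (assumes all power is IT load)."""
    return math.ceil(campus_it_load_mw / pod_it_load_mw)

for rack_kw in (50, 100):
    print(f"{rack_kw} kW racks: {racks_per_pod(10, rack_kw)} racks per 10 MW pod")

print(f"2 GW campus: about {pods_for_campus(2000)} pods of 10 MW each")
```

Under these assumptions a single pod holds only 100 to 200 high-density racks, and a 2-gigawatt campus would need on the order of 200 such pods, which helps explain why builders standardize and repeat one design.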

The Future Landscape

By 2030, whether America reaches the base case or the optimistic scenario, it will have significantly expanded its computational infrastructure. This digital backbone will likely cement computing as a foundational utility of modern society—as essential as electricity or transportation.

The challenge now lies in building these digital “power plants” responsibly, balancing innovation with considerations of energy, supply chain constraints, and equitable access. As the computational arms race accelerates, America’s advantage may ultimately depend less on raw investment figures and more on its ability to solve the complex engineering challenges of power, cooling, and infrastructure at unprecedented scales.

The race to construct America’s computational fortresses represents more than a mere infrastructure boom. It marks a fundamental restructuring of the digital economy, where processing power—not data alone—becomes the paramount currency of technological supremacy.

For investors, the implications extend far beyond the obvious plays in semiconductor manufacturing and hyperscale operators. The most sophisticated allocators will recognize that this computational arms race creates concentric circles of opportunity.

Yet prudence demands acknowledging the risks. The optimistic scenario of 100 gigawatts of AI-dedicated power by 2030 would require infrastructure investment rivaling America’s interstate highway system construction.

Should AI fail to generate commensurate economic returns, this could become a cautionary tale of malinvestment. Regulatory intervention regarding power consumption, water usage, or monopolistic practices could similarly reshape return profiles.

As electricity became the universal utility of the 20th century, computation is becoming the universal utility of the 21st. Those who control its generation, distribution, and application will wield influence akin to the oil barons of the previous century. Capital allocation decisions made today will determine which firms emerge as the digital dynasties of tomorrow’s economic order.

Any charts, graphics, projections, and forecasts included in this Presentation are presented for illustrative purposes only, in order to provide information and context. None of the statements or information contained in this Presentation constitute investment performance, nor should the inclusion of any information be treated as indicative of, or a proxy for, the investment performance of IronArc.
