Beyond Compute: Why Data Became AI’s Ultimate Constraint

A fundamental constraint is emerging in AI today. While companies race to build larger models and buy more GPUs, the real bottleneck has become something far more mundane: quality training data.

Three critical takeaways reshape how investors must evaluate AI companies:

- Mathematical constraints create a data ceiling. The 20-to-1 rule, a rule of thumb from DeepMind’s Chinchilla research, holds that compute-optimal training requires roughly 20 tokens per model parameter. A 1 trillion parameter model would therefore demand about 20 trillion tokens, roughly twice some estimates of the total stock of high-quality English text. This arithmetic, not market trends, explains why data has become the primary constraint after compute.

- Token processing efficiency drives competitive advantage. Microsoft processes 100 trillion tokens quarterly, Google handles 480 trillion monthly, and these volumes directly correlate with revenue growth. Companies optimizing token utilization gain sustainable cost advantages as investors begin valuing firms on token economics rather than traditional software metrics.

- Proprietary data creates unbreachable moats, and companies are building walls. Unlike algorithms or compute access, unique datasets cannot be purchased or replicated. Tesla’s 50 billion miles of driving data and Bloomberg’s financial archives represent irreplaceable strategic assets. Companies now recognize this value, systematically restricting API access and withdrawing from data-sharing partnerships to preserve competitive advantages within their own ecosystems.

Why does this matter? AI progress relies on three scaling factors: computing power, model architecture, and data. With the first two scaling rapidly, data is emerging as the primary constraint. Research suggests we are approaching a “token ceiling” where demand for high-quality training data will exceed available supply: the stock of quality text grows only about 5% annually, while data requirements rise in step with model sizes that have been growing exponentially.

For investors, understanding a company’s data strategy has become as critical as evaluating its AI research capabilities or compute resources. This data constraint directly impacts competitive positioning and investment outcomes, making token economics a new essential skill for business leaders evaluating AI opportunities.

The Three Pillars of AI Progress

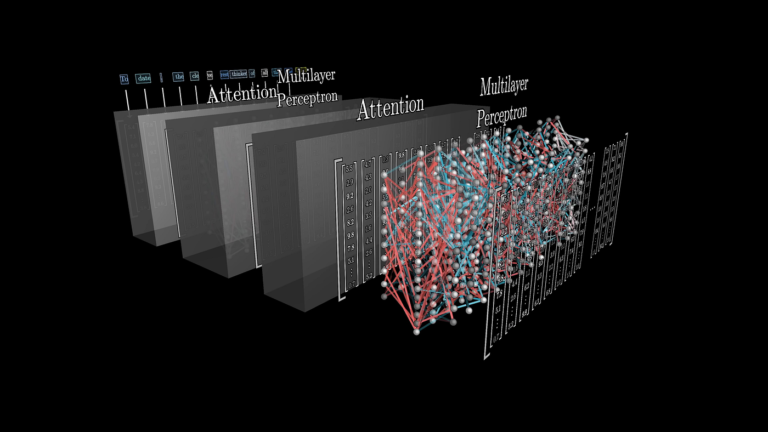

Modern AI’s remarkable advances rest upon three pillars: increasingly powerful computing infrastructure, algorithmic innovations, and massive datasets. This scaling law triad has driven breakthroughs from image recognition to large language models.

For years, compute was considered the primary limiting factor. Companies like NVIDIA rode this wave to trillion-dollar valuations by supplying the specialized chips needed for AI training. Meanwhile, vast pools of data seemed readily available by scraping the internet.

However, research reveals this foundational assumption is changing, creating new strategic imperatives for companies competing in AI markets. Understanding these mathematical constraints becomes critical for investors because they determine which business strategies will succeed as the industry approaches fundamental scaling limits.

The Coming Data Crunch

Academic research reveals a concerning reality: we are approaching limitations in high-quality data to feed increasingly hungry AI models. This data availability challenge represents a significant constraint facing AI development in the coming years.

The numbers demonstrate the scope of this challenge. Between 2018 and 2022, the largest trained model grew from approximately 340 million parameters (BERT) to 540 billion (PaLM), a roughly 1,600-fold increase. While training data volumes also expanded dramatically, academic studies show they have not kept pace with optimal model growth requirements.

Some researchers estimate the total stock of high-quality English text at approximately 10 trillion tokens. Meanwhile, academic analysis indicates that training a large model like PaLM-540B optimally would require 10.8 trillion tokens (540 billion parameters × 20 tokens each), slightly more than that entire estimated stock. One influential study suggests that “we may run out of new data between 2023 and 2027” under current trajectory assumptions.

This scarcity creates a three-pronged challenge that directly impacts business strategy and competitive positioning, though breakthrough innovations could change these dynamics:

- Quality degradation. Companies are exhausting the highest-quality content sources, such as Wikipedia, published books, and academic papers.

- Access restrictions. Roughly 25% of the highest-quality data is no longer available to Common Crawl web scraping because publishers have restricted access.

- Freshness challenges. Models quickly become outdated without continuous data refreshes, which requires ongoing data collection strategies that must navigate evolving legal and regulatory landscapes.

These constraints explain why companies are dramatically shifting capital allocation from pure compute investments toward data acquisition and processing capabilities.

The Math Problem Behind AI’s Future

Two landmark academic studies created the mathematical foundation driving today’s AI investment strategies and revealed sustainability challenges.

OpenAI’s 2020 scaling laws paper showed that bigger models reliably deliver better performance according to predictable mathematical relationships. This gave executives confidence to invest billions in scale. DeepMind’s 2022 Chinchilla study then refined these mathematical relationships, revealing the “20-to-1 Rule”: their research shows that each model parameter requires approximately 20 tokens of training data for peak performance.

These mathematical relationships explain why major tech companies are spending $60-80 billion annually on AI infrastructure. The research demonstrated predictable returns on scaling investments.

The constraint: under this rule, a 1 trillion parameter model would demand about 20 trillion tokens, roughly twice the estimated 10 trillion tokens of high-quality English text. This creates a mathematical ceiling, not just an economic one.

Understanding these relationships is essential for investors evaluating AI ventures. Under the 20-to-1 rule, a 1 billion parameter model requires roughly 20 billion tokens for optimal training, which the analysis equates to approximately 40,000 books (about 500,000 tokens per book). A 100 billion parameter model needs approximately 2 trillion tokens, or roughly 4 million books at the same ratio, on the order of a major university library’s entire collection.

Academic research demonstrates that many large models have been under-trained relative to their potential capabilities. GPT-3, with 175 billion parameters, was trained on only 300 billion tokens, roughly 1.7 tokens per parameter, significantly below the optimal ratio.
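The arithmetic in this section can be reproduced in a few lines. The sketch below assumes the article's two headline figures, a ratio of roughly 20 tokens per parameter and a stock of about 10 trillion high-quality tokens; the function and constant names are illustrative, not taken from any library.

```python
# Sketch of the "20-to-1" rule of thumb described above.
TOKENS_PER_PARAM = 20        # approximate ratio cited in the article
HIGH_QUALITY_STOCK = 10e12   # article's estimate of high-quality English text, in tokens


def optimal_tokens(params: float) -> float:
    """Training tokens suggested by the 20-to-1 rule for a given parameter count."""
    return TOKENS_PER_PARAM * params


def tokens_per_param(params: float, tokens: float) -> float:
    """Actual tokens-per-parameter ratio for a model that has already been trained."""
    return tokens / params


# Model sizes mentioned in the article.
models = {
    "1B": 1e9,
    "100B": 100e9,
    "PaLM-540B": 540e9,
    "1T": 1e12,
}

for name, params in models.items():
    need = optimal_tokens(params)
    share = need / HIGH_QUALITY_STOCK
    print(f"{name:>10}: {need / 1e12:6.2f}T tokens ({share:.0%} of the estimated stock)")

# GPT-3 as described above: 175B parameters, 300B training tokens.
gpt3_ratio = tokens_per_param(175e9, 300e9)
print(f"GPT-3 ratio: {gpt3_ratio:.1f} tokens per parameter")
```

Changing `TOKENS_PER_PARAM` or `HIGH_QUALITY_STOCK` shows how sensitive the "ceiling" is to either estimate, which is worth keeping in mind because both are approximations.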

As models scale larger, data requirements grow linearly with parameter count, and parameter counts have been growing exponentially, while the available pool of high-quality data remains essentially fixed. This creates an inevitable collision point where optimal training becomes challenging with existing data sources, constraining the scaling approach that has driven AI progress, though breakthrough efficiency innovations could alter these dynamics.
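One way to picture the collision point is a toy projection. The sketch below takes the article's figures for the data stock (about 10 trillion tokens, growing about 5% a year) and combines them with a purely hypothetical demand path: a starting requirement of 2 trillion tokens (the 100 billion parameter case) that doubles every year. The doubling rate is an assumption chosen for illustration, not a forecast.

```python
from typing import Optional


def years_until_ceiling(
    stock: float,
    stock_growth: float,
    demand: float,
    demand_growth: float,
    max_years: int = 50,
) -> Optional[int]:
    """Return the first year in which demand exceeds the stock, or None if it never does."""
    for year in range(max_years + 1):
        if demand > stock:
            return year
        # Compound both quantities forward by one year.
        stock *= 1 + stock_growth
        demand *= 1 + demand_growth
    return None


crossover = years_until_ceiling(
    stock=10e12,         # article's estimate of high-quality text
    stock_growth=0.05,   # article's ~5% annual growth
    demand=2e12,         # tokens for a 100B-parameter model under the 20-to-1 rule
    demand_growth=1.0,   # HYPOTHETICAL: demand doubles each year
)
print(f"Demand exceeds the stock after {crossover} years under these assumptions")
```

The useful part is not the specific answer but the shape: any demand growth rate meaningfully above 5% eventually crosses the supply curve, and the crossover year moves only slowly as the starting stock estimate changes.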

Quality Beats Scale

In this constrained environment, data quality has become paramount. A key insight from DeepMind’s “Chinchilla” research was that many large models were under-trained on data: the 70B parameter Chinchilla model, trained on roughly 4× more data than DeepMind’s 280B parameter Gopher, outperformed both Gopher and the 175B parameter GPT-3.
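The trade-off behind that result can be sketched with a common rule of thumb that training compute is roughly 6 × parameters × tokens. The token counts below (about 1.4 trillion for the 70B model and about 300 billion for a 280B comparison model from the same study) are approximate figures from the published research rather than numbers from this article, so treat them as assumptions.

```python
def training_flops(params: float, tokens: float) -> float:
    """Rule-of-thumb training compute: about 6 FLOPs per parameter per token."""
    return 6 * params * tokens


# Approximate published figures (assumptions, not taken from this article).
small_params, small_tokens = 70e9, 1.4e12    # 70B-parameter model, more data
large_params, large_tokens = 280e9, 300e9    # 280B-parameter model, less data

small_flops = training_flops(small_params, small_tokens)
large_flops = training_flops(large_params, large_tokens)

compute_ratio = small_flops / large_flops
data_ratio = small_tokens / large_tokens

print(f"Small model compute: {small_flops:.2e} FLOPs")
print(f"Large model compute: {large_flops:.2e} FLOPs")
print(f"Compute ratio (small/large): {compute_ratio:.2f}")
print(f"Data ratio (small/large): {data_ratio:.1f}x")
```

Under these assumptions the two training runs cost a similar amount of compute, which is the point of the finding: for a fixed budget, spending on more data instead of more parameters produced the better model.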

This finding fundamentally shifts competitive strategy from acquisition-based to curation-based approaches. Research demonstrates that not all data provides equal training value. Academic studies show that curating high-quality, diverse data delivers better model performance than blindly scraping for volume. Cleaned and filtered datasets like Google’s C4 (a 750GB refined subset of Common Crawl) achieve better accuracy than raw, unfiltered web dumps.

The economics of this shift are profound. AI companies are devoting significant resources to data engineering (assembling, cleaning, and augmenting datasets) rather than simply scaling compute or tweaking algorithms. This represents a fundamental shift in resource allocation within AI development budgets.

These resource allocation changes directly impact competitive positioning, as companies with superior data curation capabilities gain sustainable advantages over those focused purely on scale.

The New Unit of AI Economics

Recent earnings calls from major technology companies reveal a profound shift: computational intelligence, measured in tokens, has become a standardized unit of economic value in the AI era.

Microsoft reported in their Q3 2025 earnings that the company processed “over 100 trillion tokens this quarter, up 5X year-over-year” through their Azure AI services. This represents one of the most significant disclosed token processing volumes in the industry and demonstrates the exponential growth in AI workload demands across enterprise customers.

Google’s transformation is equally striking. CEO Sundar Pichai revealed during their Q1 2025 earnings call that the company now processes “over 480 trillion tokens monthly” across products and APIs compared to 9.7 trillion tokens monthly the previous year, a 50-fold increase. Additionally, Pichai disclosed that “more than 30% of all new code at Google is generated by AI”, demonstrating how token processing directly translates to productivity gains.

The scale of these operations provides insight into the economic magnitude of the token economy. Microsoft’s token processing growth coincides with their AI business approaching a $10 billion annual revenue run rate, while Google’s 50x token processing increase correlates with significant improvements in their cloud services margins.
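The disclosed volumes are easier to compare once they are put on the same footing. The sketch below uses only the figures quoted above and assumes, for simplicity, that Microsoft's quarterly volume is spread evenly across three months. Note that the two disclosures cover different scopes (Azure AI services versus Google's products and APIs), so the comparison is indicative rather than like-for-like.

```python
# Figures quoted in the article.
MSFT_TOKENS_PER_QUARTER = 100e12      # "over 100 trillion tokens this quarter"
MSFT_YOY_MULTIPLE = 5                 # "up 5X year-over-year"
GOOG_TOKENS_PER_MONTH = 480e12        # "over 480 trillion tokens monthly"
GOOG_PRIOR_TOKENS_PER_MONTH = 9.7e12  # monthly volume a year earlier


def growth_multiple(current: float, prior: float) -> float:
    """How many times larger the current volume is than the prior one."""
    return current / prior


# ASSUMPTION: quarterly volume is spread evenly over three months.
msft_monthly = MSFT_TOKENS_PER_QUARTER / 3
# Implied volume in the same quarter one year earlier.
msft_prior_quarter = MSFT_TOKENS_PER_QUARTER / MSFT_YOY_MULTIPLE
goog_multiple = growth_multiple(GOOG_TOKENS_PER_MONTH, GOOG_PRIOR_TOKENS_PER_MONTH)

print(f"Microsoft, monthly equivalent: {msft_monthly / 1e12:.1f}T tokens")
print(f"Microsoft, implied prior-year quarter: {msft_prior_quarter / 1e12:.0f}T tokens")
print(f"Google, year-over-year multiple: {goog_multiple:.1f}x")
```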

Meta CEO Mark Zuckerberg announced in January 2025 that the company plans to invest “$60 billion to $65 billion in capital expenditures this year” specifically for AI infrastructure, representing a significant jump from their estimated $38-40 billion in 2024.

The emergence of token processing volumes as key business metrics signals a fundamental shift in how computational intelligence is measured, managed, and monetized. As one Wall Street analyst recently noted, “We’re now tracking token growth rates the way we used to track monthly active users. It’s become that central to valuation models.”

Who Wins the Data Wars

The data availability challenge is reshaping competitive dynamics, creating new categories of winners and losers based on data access rather than traditional technology capabilities.

Proprietary Data Becomes the Ultimate Moat

Companies with unique, high-quality datasets possess strategic advantages. These proprietary data assets function as competitive moats because they cannot be purchased, replicated, or circumvented through traditional business development approaches.

Tesla maintains an enormous driving telemetry advantage with 5+ million cars each collecting video and sensor data daily, amounting to over 50 billion miles driven on Autopilot by 2023. Competing autonomous vehicle companies such as Waymo and Cruise possess significantly smaller datasets, with Waymo recording only tens of millions of autonomous miles by 2020.

Bloomberg leverages a comprehensive archive of financial documents, news, pricing data, and private communications from the Bloomberg Terminal platform. They utilized this proprietary dataset to train BloombergGPT, creating a financial AI model that competitors cannot replicate due to Bloomberg’s unique position as the dominant financial data platform.

Healthcare providers operate in a highly regulated environment where proprietary patient datasets provide sustainable advantages for AI applications, particularly given privacy regulations that prevent data sharing across organizational boundaries.

When Regulation Becomes Strategy

The data availability challenge has intersected with evolving regulatory and legal frameworks to create new competitive dynamics. Forward-thinking companies are turning regulatory complexity into a strategic moat rather than treating these constraints merely as compliance obligations.

Copyright and intellectual property constraints have emerged as significant factors affecting data availability. Major publishers including The New York Times, Getty Images, and book publishers have filed lawsuits challenging the use of copyrighted content in AI training. These legal challenges have created uncertainty around fair use applications and are forcing AI companies to reconsider their data acquisition strategies.

Privacy regulations including GDPR, CCPA, and emerging state-level privacy laws restrict the use of personal data in AI training. These regulations require companies to implement privacy-by-design approaches and limit access to valuable datasets containing personal information, such as social media content and user-generated data.

Content licensing and partnership strategies have become essential competitive tools. Companies are negotiating exclusive licensing deals with content creators, publishers, and data providers to secure training data access. Microsoft’s partnership with news publishers, OpenAI’s licensing agreements with content creators, and Meta’s deals with social media platforms represent strategic moats that competitors cannot easily replicate.

Industry analysis projects that copyright enforcement could restrict access to 15-25% of currently available high-quality training data over the next three years. Academic research suggests that privacy regulations could limit access to an additional 10-15% of datasets containing personal information.
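To see what those percentages could mean in tokens, the sketch below applies them to the roughly 10 trillion token stock cited earlier. It assumes that both percentages apply to that same stock and that the copyright and privacy restrictions do not overlap; the article does not state either point, so the result is a rough bound rather than an estimate.

```python
STOCK_TOKENS = 10e12             # article's estimate of high-quality text
COPYRIGHT_RANGE = (0.15, 0.25)   # share projected to be restricted by copyright enforcement
PRIVACY_RANGE = (0.10, 0.15)     # additional share projected to be restricted by privacy rules


def remaining_stock(stock: float, *restricted_shares: float) -> float:
    """Stock left after removing non-overlapping restricted shares (ASSUMPTION: no overlap)."""
    return stock * (1 - sum(restricted_shares))


best_case = remaining_stock(STOCK_TOKENS, COPYRIGHT_RANGE[0], PRIVACY_RANGE[0])
worst_case = remaining_stock(STOCK_TOKENS, COPYRIGHT_RANGE[1], PRIVACY_RANGE[1])

print(f"Remaining stock, low-restriction case:  {best_case / 1e12:.1f}T tokens")
print(f"Remaining stock, high-restriction case: {worst_case / 1e12:.1f}T tokens")

# Largest model the remaining stock could train at about 20 tokens per parameter.
for label, stock in (("low", best_case), ("high", worst_case)):
    print(f"Max 20-to-1 model size ({label} restriction): {stock / 20 / 1e9:.0f}B parameters")
```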

New Data Sourcing Strategies

To overcome limitations, organizations are exploring innovative approaches that balance effectiveness with regulatory compliance. Synthetic data generation involves creating artificial data through simulation or AI-generated training examples. While powerful for addressing data scarcity, this approach carries risks of “model collapse” if AI systems train primarily on other AI outputs, though breakthrough innovations in this area could significantly change the landscape.

Retrieval-augmented generation (RAG) represents a significant architectural shift where models rely on external tools to fetch information dynamically rather than encoding every fact in their parameters, reducing training data requirements.
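A minimal sketch of the retrieval step illustrates the idea. It uses simple word overlap in place of the embedding search a production system would typically use, and it stops at building the prompt rather than calling a model. The document store, function names, and prompt layout are all hypothetical.

```python
import re

# Tiny illustrative document store; the facts are ones stated in this article.
DOCS = [
    "Chinchilla suggests roughly 20 training tokens per model parameter.",
    "C4 is a 750GB refined subset of Common Crawl.",
    "GPT-3 has 175 billion parameters and was trained on 300 billion tokens.",
]


def tokenize(text: str) -> set:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list, k: int = 1) -> list:
    """Return the k documents sharing the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(query_words & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list, k: int = 1) -> str:
    """Place the retrieved text in front of the question for a model to answer from."""
    context = "\n".join(retrieve(query, docs, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


prompt = build_prompt("How many tokens was GPT-3 trained on?", DOCS)
print(prompt)
```

The design point is that the fact lives in the document store, not in the model's parameters: updating `DOCS` changes what the system can answer without any retraining.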

Continuous training and human-in-the-loop systems are replacing static training approaches. Companies now implement regular refresh cycles and incorporate expert knowledge directly into training processes. Industry reports show that these human feedback systems improve response quality by 20-25% while reducing computational requirements.

Investment Implications: Follow the Data

For investors, understanding data dynamics has become critical for evaluating AI ventures, requiring fundamental changes in due diligence processes and valuation methodologies.

Data quality due diligence has become essential when evaluating AI companies. Investors must scrutinize data acquisition strategies by asking specific questions: What unique data does the company possess? How was it obtained legally and with appropriate consent? Can competitors easily replicate the dataset? What regulatory risks does the data strategy present?

Capital allocation is shifting to reflect the new reality: expenditures that once went primarily into model research, development, and compute infrastructure are being redirected toward data acquisition, curation, and processing infrastructure. Startups focused on data labeling, cleaning, and synthetic data generation have become critical parts of the AI value chain and represent emerging investment opportunities.

Data flywheels create sustainable competitive advantages through network effects where more users generate more data, which enables better models, which attract more users. Google Search exemplifies this dynamic where each query and click refines ranking algorithms. Companies establishing such flywheels deserve premium valuations due to their self-reinforcing competitive advantages.

Token economics expertise has emerged as a required competency for investors evaluating AI companies. As token processing volumes become standardized business metrics, investors need expertise in evaluating token utilization efficiency, processing growth rates, and cost optimization strategies.
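As a rough illustration of what such an evaluation might involve, the sketch below computes two simple unit metrics: serving cost per million tokens and gross margin at a given price. Every input value is hypothetical and chosen only to make the arithmetic visible; none of it describes a real company.

```python
def cost_per_million_tokens(total_serving_cost: float, tokens_processed: float) -> float:
    """Serving cost in dollars for each million tokens processed."""
    return total_serving_cost / (tokens_processed / 1e6)


def gross_margin(price_per_million: float, cost_per_million: float) -> float:
    """Share of the per-token price left after serving cost."""
    return (price_per_million - cost_per_million) / price_per_million


# HYPOTHETICAL inputs for a fictional company over one period.
serving_cost_usd = 1_200_000     # total inference spend
tokens_processed = 2e12          # tokens served in the same period
price_per_million_usd = 1.00     # blended price charged per million tokens

unit_cost = cost_per_million_tokens(serving_cost_usd, tokens_processed)
margin = gross_margin(price_per_million_usd, unit_cost)

print(f"Cost per million tokens: ${unit_cost:.2f}")
print(f"Gross margin per token:  {margin:.0%}")
```

Tracked over several periods alongside token volume growth, these two numbers give a first read on whether rising usage is translating into improving unit economics.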

Conclusion: Data as Strategic Capital

The AI industry has entered a new phase where data, not just algorithms or compute, increasingly determines competitive outcomes. For investors, this shifts the evaluation framework: the most valuable AI companies will be those that secure sustainable data advantages or pioneer new methods to overcome data limitations.

Data has become the limiting reagent in the modern AI formula, changing how investors must evaluate AI companies and allocate capital in this sector. The companies that succeed will be those that understand data not just as fuel for their models, but as strategic capital that must be acquired, protected, and leveraged with the same rigor applied to financial capital.

In the race to build frontier AI, those who master data acquisition, curation, and utilization will outpace those focused solely on model architecture or raw compute. The key question for AI-powered ventures is no longer just “How sophisticated is your algorithm?” but “What data do you have that others don’t?”

As token processing becomes a fundamental unit of AI production, understanding token economics will be essential for valuing companies in this new era. However, breakthrough innovations that fundamentally change these dynamics remain possible and could reshape the entire landscape. The continued advancement of artificial intelligence depends not just on better algorithms or more powerful hardware, but on humanity’s ability to generate, capture, and curate the data that teach machines to think.