The Great AI Excavation

How frontier model companies are mining for non-human intelligence.

The modern AI revolution represents a rare technological nexus where three critical streams — data abundance, computing power, and algorithmic innovation — have converged with extraordinary force. Like the California Gold Rush of 1849, this convergence has triggered a frantic scramble for advantage, with eye-watering sums invested on increasingly uncertain returns.

Much as the California Gold Rush transformed America’s economic landscape, today’s race to build increasingly capable artificial intelligence systems represents a fundamental reshaping of the digital economy. The architects of AI are mining something potentially more valuable than any mineral: non-human intelligence that could transform virtually every industry.

That said, unlike the Gold Rush, this is no speculative bubble, but instead an industrial-scale extraction of computational intelligence. Just as raw gold requires skilled artisans and manufacturers to transform it into valuable products, this digital intelligence requires application developers to refine it into solutions that address real human problems and justify the preemptive capex spend.

This digital gold, unlike its physical counterpart, multiplies in value the more it’s used. Yet it requires enormous resources to extract and refine. Those who control the mines, and those who craft the finest applications from their output, stand to redefine industries and power structures in the coming decade.

Key Takeaways

- Capital intensity creates natural oligopoly: Training the 2024 generation of state of the art (SOTA) frontier LLMs required $50-100M+ in compute resources, signaling a high-walled garden favoring well-funded players. Only 5-7 organizations globally possess the computational capital to compete at the bleeding edge, though there are plenty of followers once the path has been blazed (cough cough DeepSeek).

- The scaling plateau approaches: As extreme scaling yields diminishing returns, future competitive advantages will stem from algorithmic efficiency, data quality, and training innovation rather than just brute-force parameter increases.

- Inference economics dominate business models: While training costs represent one-time investments, serving millions of users daily constitutes the true cost of goods sold determining long-term viability.

- Supply chain constraints create strategic moats: GPU shortages have created a multi-tiered market where preferential access represents a significant competitive advantage.

- Efficiency innovations bend the cost curve: Sparse architectures, quantization, and retrieval augmentation are reducing costs 2-4x for equivalent performance, potentially democratizing capabilities once reserved for GPU-rich tech giants.

- Value chain emergence: The market is structuring around three layers – infrastructure providers of data and compute (Scale AI and NVIDIA), foundation model developers (OpenAI), and application builders – each with distinct economic profiles.

Mining Non-Human Intelligence

Foundation model companies like OpenAI, Anthropic, and Google DeepMind function essentially as sophisticated mining operations extracting a precious resource — non-human intelligence — from vast computational landscapes and ores of data. They deploy infrastructure investments consuming electricity at the scale of small cities to process digital ore that might yield valuable intelligence.

Early language models discovered “surface intelligence” through relatively straightforward approaches — statistical patterns in text that produced useful approximations of understanding.

This resembles early gold miners who began with placer mining, simply panning in streams to find eroded nuggets. As surface gains depleted, AI companies developed increasingly sophisticated techniques: reinforcement learning from human feedback, mixture-of-experts architectures, and specialized training paradigms.

In this analogy, NVIDIA stands as the quintessential “pick and shovel” business of the AI gold rush. Just as Levi Strauss made fortunes selling essential equipment rather than mining themselves, NVIDIA supplies the computational tools required by every AI developer. Data providers like Scale AI represent another essential supplier category — analogous to the prospectors who identified promising locations for mining operations.

Frontier models are not merely a technological breakthrough but a new class of capital-intensive digital infrastructure with unique economic properties: massive upfront R&D costs, relatively low marginal costs of deployment, and network effects from data and usage.

The race to build increasingly powerful AI systems has become one of the most capital-intensive technology competitions in history. This represents a fundamental shift in software economics, where traditionally the primary cost was human capital rather than computational infrastructure.

For investors, understanding this structure is critical. It illuminates barriers to entry, sustainable competitive advantages, and where value might ultimately accrue in the AI stack.

Historical Development: From Research to Industrial Scale

The Watershed Moment

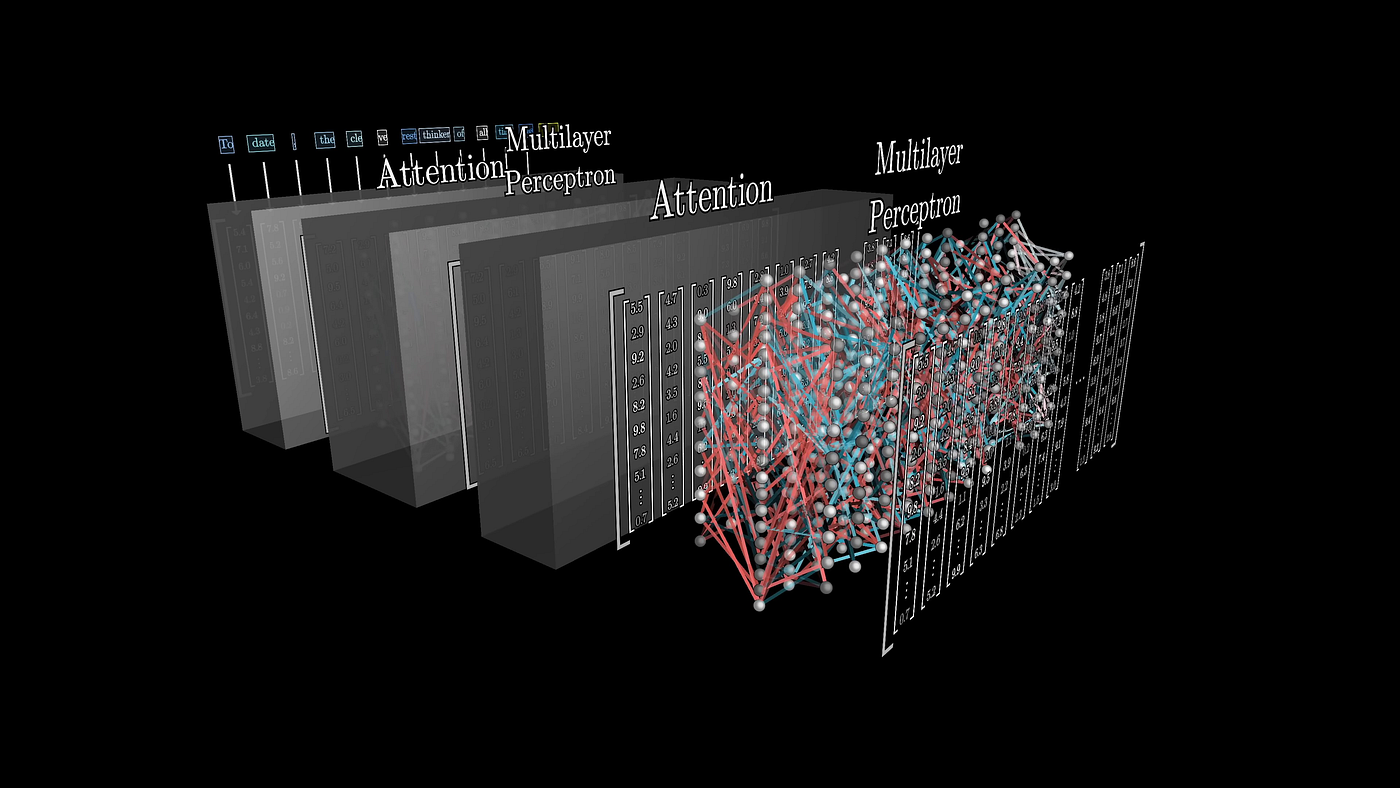

AI’s “steam engine moment” arrived in 2012 when AlexNet demonstrated the power of deep convolutional neural networks for image recognition. Its stunning victory in the ImageNet competition signaled that bigger models and more data would lead to better results. By 2017, Google researchers had introduced the Transformer architecture — a design uniquely suited to modeling relationships in text with unprecedented parallelism — which quickly became the foundation for all modern large language models (LLMs).

Late 2022 marked AI’s “Big Bang” in public consciousness when ChatGPT amassed 100 million users in just two months — the fastest consumer application adoption in history. This conversational assistant showcased a dramatic usability leap for LLMs, crossing the threshold from laboratory curiosity to world-changing technology.

The Industrial Complex Takes Shape

The subsequent gold rush has witnessed competitors racing to claim territory in an increasingly crowded landscape. Meta’s LLaMA outperformed OpenAI’s much larger GPT-3 on certain benchmarks. OpenAI countered with GPT-4, introducing multimodality and dramatically improved capabilities. Google entered with PaLM 2, while Anthropic launched Claude 2 with a 100,000-token context window. The pace continued unabated through 2024 and beyond, with ever more capable systems emerging at a dizzying rate.

Training GPT-3 required an estimated 3.1 × 10^23 floating-point operations — what a single GPU would take 355 years to execute — translating to roughly $4.6 million in direct costs. GPT-4 reportedly cost 10 – 20 times more yet delivered more modest relative gains. This pattern of diminishing returns has become a defining characteristic of the industry.

Training Cost Analysis: The Economics of Scale

Like prospectors upgrading from pans to hydraulic operations, AI labs have rapidly scaled to extract increasingly sophisticated intelligence. The equipment required has become specialized and capital-intensive, creating natural barriers favoring the well-capitalized few.

A single training run for GPT-4 reportedly consumed 25,000 NVIDIA A100 GPUs over 90 days, costing $50-100 million — a 10-20× increase over GPT-3 just three years earlier. Just as the gold rush quickly evolved from individual prospectors to industrial operations, the frontier AI landscape has consolidated around organizations with resources to deploy thousands of specialized chips and employ the brightest researchers.

Modern Training Infrastructure

Building a frontier-scale model requires immense planning, computational resources, and significant capital. Training durations for cutting-edge LLMs are measured in weeks or months of around-the-clock computing. Google’s PaLM underwent a final training run of about 64 days on Google’s TPUv4 pod infrastructure, while Meta’s LLaMA 3 was trained over 54 days on 16,000 NVIDIA GPUs – which costs ~$2 per GPU per hour, estimating the total cost of the training run (excluding hardware costs) at ~$50M.

Labs typically conduct:

- Exploratory runs on smaller models to tune parameters

- Full-scale runs on complete datasets

- Fine-tuning for alignment and specific capabilities

The economics vary significantly across organizations:

Cloud-Based Training (OpenAI, Anthropic)

- OpenAI relied on Microsoft Azure, with GPT-4’s training estimated at $60 – $100M

- Anthropic leveraged Google Cloud then AWS, with significantly lower but still substantial costs

Owned Infrastructure (Meta, Google)

- Meta’s Research SuperCluster reduced marginal training costs to primarily electricity and maintenance

- Google’s custom TPU infrastructure required high upfront investment but offered greater efficiency

By early 2025, top AI labs have effectively turned entire data centers into single training engines. Hardware configurations have grown to staggering scales: OpenAI using 20,000+ H100 GPUs, Meta expanding to two clusters of 24,000 H100s, and Google deploying thousands of advanced TPUs, with each top-tier GPU like the NVIDIA Hopper or Blackwell costing $25,000–$40,000; a full supercomputer of 10,000 GPUs runs into hundreds of millions in hardware alone.

Operational Economics and Efficiency

The cost to train frontier models includes both operational expenses (running hardware for months) and capital expenses (mostly chips are racks). While per-unit compute costs have slightly improved with newer hardware and improving supply chains, total costs have ballooned as models (as measured by parameter size) and datasets grew faster than efficiency gains.

Crucial developments moderating costs include:

- Improved parallelism and compiler optimizations

- “Compute-optimal” model sizing following DeepMind’s Chinchilla findings

- Mixed-precision training techniques

To bear these expenses, AI leaders have secured and announced unprecedented funding: OpenAI raised $40B mostly from Softbank, Anthropic is projecting $1B+ in spending for its next-gen model, Meta committed to building a 2GW data center with over a million NVDA GPUs, and Microsoft guiding to a 2025 Capex figure that exceeds $80B.

Infrastructure & Supply Chain: The Critical Bottleneck

If data is the raw material from which AI is refined, computing infrastructure represents the essential machinery of this extractive industry. Between 2022 and 2024, GPU availability emerged as the defining bottleneck in AI development.

NVIDIA’s flagship chips were produced in limited quantities and quickly purchased by well-funded players, creating what analysts described as a “huge supply shortage.” This resource constraint mirrored the gold rush, with companies scrambling for allocation of precious silicon rather than precious metals.

This parallel extends further when considering who profited most reliably and first: often not the miners themselves, but those who sold them equipment. Critical suppliers to the AI ecosystem like Scale AI or NVIDIA, with its market capitalization soaring past $2 trillion in 2024, echo Levi Strauss — and other entrepreneurs who made fortunes by providing essential tools rather than mining gold itself.

The supply chain dynamics created a multi-tier market for AI compute:

- Major cloud providers and tech giants with preferential supplier relationships

- Well-funded AI labs with cloud partnerships

- Startups and smaller players competing for limited remaining capacity

Inference Costs & Monetization: From Mining to Market

Once intelligence has been extracted through training, the challenge becomes distributing and monetizing this resource. While training costs dominate discussions, the daily operational expenses of running models at scale present the true test of business viability.

By January 2023, ChatGPT handled approximately 10-20 million queries daily, costing OpenAI roughly $694,000 per day — about 0.36 cents per query or 10 times more than a standard keyword search on Google. By 2025, these costs increased with more sophisticated models but were partially offset by efficiency gains.

Serving Innovations

Several innovations have helped manage inference costs:

- Quantization reducing model precision from 16-bit to 8-bit or 4-bit

- Caching for common prompts

- Model distillation creating smaller, faster variants

- Tiered deployments that reserve flagship models for complex queries

Monetization Strategies

The high inference costs have driven diverse monetization models:

- Subscription Services: ChatGPT Plus and Claude Pro ($20/month) providing steady revenue

- API Access: Usage-based pricing for developers

- Enterprise Integration: Microsoft bundling capabilities into Office 365 Copilot

Successful companies are finding ways to amortize inference costs across large user bases while charging premium prices for specialized capabilities. The initial wave of free services is giving way to sustainable business models balancing accessibility with profitability.

Future Innovations & Cost Trends: Bending the Curve

As with any extractive industry reaching maturity, AI economics will be shaped by innovations increasing efficiency and expanding access. Three approaches stand out:

Sparse Modeling (Mixture-of-Experts)

Models like xAI’s Grok-1 activate only a subset of parameters for each query, achieving trillion-parameter scale expertise at a fraction of computational cost.

Optimization and Advanced Algorithms

Techniques like FlashAttention, data pruning, and 8-bit precision training significantly reduce memory requirements and accelerate processing.

Retrieval-Augmented Architectures

Rather than embedding all knowledge in parameters, these models access external databases as needed, potentially matching the performance of much larger models.

The impact is already visible: what cost $100 to achieve in early 2022 (generating a million tokens) cost approximately $10 by late 2023 — a 10× reduction in just 18 months.

Looking ahead, three scenarios emerge:

- Optimistic: Continued efficiency gains deliver a 10× reduction in cost every 1-2 years, democratizing access.

- Moderate: Efficiency improvements offset much of the cost growth of larger models, favoring established players.

- Pessimistic: Without breakthrough efficiencies, costs escalate to $1 billion per frontier model by 2027, further concentrating capabilities.

For investors, improvements in efficiency represent potential market-restructuring forces. Organizations mastering “doing more with less” may disrupt the current oligopoly by democratizing capabilities that currently require enormous resources.

Industry Implications & Strategic Considerations

The economics of frontier AI reveal an industry in crucial transition. Unlike previous technology bubbles centered on speculative value, the AI race represents extraction of a critical and valuable resource — non-human intelligence that can be transformed into useful applications.

The Bifurcated Market

The AI landscape is splitting into two segments:

- Frontier models: Capital-intensive development requiring tens or hundreds of millions in R&D, dominated by 5-7 major players.

- Specialized implementations: More accessible development through fine-tuning or smaller purpose-built models, enabling a broader ecosystem of startups and applications.

The Three-Layer Value Chain

Value is accruing at distinct layers:

- Infrastructure providers: NVIDIA, Scale AI, and cloud platforms capturing substantial value through hardware and hosting (with 50-80% gross margins).

- Foundation model providers: Organizations developing core capabilities and licensing them broadly.

- Application layer: Companies embedding AI into solutions for specific industries or use cases.

This mirrors the gold value chain, where value accrued to equipment manufacturers, mining operations, and craftsmen who transformed raw materials. Microsoft’s Nadella framed their massive investments in these terms: “We’re making these investments not for vanity but because AI will fundamentally change every software category.”

Open vs. Closed Ecosystem Dynamics

Two competing philosophies have emerged:

- Closed, API-based models (OpenAI, Anthropic) monetizing through usage fees

- Open-source foundation models (Meta) creating value through ecosystems

Elon Musk’s approach with xAI reflects a sort of hybrid middle path, open-sourcing previous generation models, while leveraging the Twitter / X platform to train xAI models at lower marginal cost.

Conclusion: Not a Bubble, But a Resource Boom

The frontier model paradigm has shifted from “bigger is better” to “better is better”—where “better” might mean larger in some cases, but always with enhancements in data quality, training efficiency and alignment. The parameter race continues (now crossing the trillion mark), yet it’s accompanied by more nuanced strategies to ensure those parameters are put to good use.

Today’s AI companies increasingly resemble industrial mining operations, with massive capital investments, specialized equipment and teams of engineers working to extract a precious resource from the digital bedrock. Once refined, this intelligence is fashioned by application-layer companies into useful products for businesses and consumers. The infrastructure providers — NVIDIA for GPUs, cloud platforms for hosting, data providers like Scale AI for training — have become the true “picks and shovels” sellers of this gold rush, often reaping dependable profits regardless of which model ultimately wins the market.

For economies, governments and societies, the implications are profound.

Unlike gold, oil, and other finite physical commodities, artificial intelligence represents a potentially infinite resource that becomes more valuable as it is refined and deployed. Each new generation of models builds upon the last, creating a compounding effect absent from traditional extractive industries.

We are witnessing the earliest days of mining humanity’s most valuable resource yet: intelligence that amplifies and stands independent of our own.

Works Cited

- “ChatGPT: Optimizing Language Models for Dialogue.” 30 Nov. 2022. openai.com

- “Meta heats up Big Tech’s AI arms race with new language model.” 24 Feb. 2023

- “OpenAI releases GPT-4, a multimodal AI that it claims is state-of-the-art.” 14 Mar. 2023

- The Verge. “Google announces PaLM 2 AI language model, already powering 25 Google services.” 10 May 2023

- “List of large language models.” Accessed 2025

- “List of large language models.” (entries for Claude 2.1 and Grok 1). Accessed March 23, 2025

- “Anthropic releases Claude 2, its second-gen AI chatbot.” 11 July 2023

- The Verge. “Google launches Gemini, the AI model it hopes will take down GPT-4.” 6 Dec. 2023

- “Introducing the next generation of Claude (Claude 3 family).” 4 Mar. 2024

- “OpenAI rolls out GPT-4.5 for some paying users, to expand access next week.” 27 Feb. 2025

- SemiAnalysis (D. Patel). “The Inference Cost of Search Disruption – Large Language Model Edition.” 2023

- Business Insider. “ChatGPT could cost over $700,000 per day to operate – Microsoft is trying to make it cheaper.” 20 Apr. 2023

- Fabricated Knowledge. “The Coming Wave of AI, and How Nvidia Dominates.” 2023

- Heim, Lennart. “Estimating PaLM’s training cost.” 2022

- The Register. “Meta to boost training infra for Llama 4 tenfold.” 1 Aug. 2024

- “Anthropic’s $5B, 4-year plan to take on OpenAI.” 6 Apr. 2023

- Business Today (India). “The rise of Grok: Elon Musk’s foray into the AI chatbot

- ” 17 Mar. 202519. Reuters. “Meta and Microsoft Introduce the Next Generation of Llama 2.” 18 July 2023 (Meta press release)

- “Meta to 10x training compute infrastructure for Llama 4 – maybe deliver it next year.” 1 Aug. 2024

Disclosure

This document is not a recommendation for any security or investment. Nothing herein should be interpreted or used in any manner as investment advice.

Do not rely on any opinions, predictions, projections or forward looking statements contained herein. Certain information contained in this document constitutes “forward-looking statements” that are inherently unreliable and actual events or results may differ materially from those reflected or contemplated herein.

Certain information contained herein has been obtained from third-party sources. Although IronArc believes the information from such sources to be reliable, IronArc makes no representation as to its accuracy or completeness.